How Did Google Stop Unethical Link Building?


Aaron Gray


October 26, 2022


11 min read


Name one search engine without thinking. If you say Google, well, we can’t blame you.

Most articles about search engine optimization (SEO) mention Google instead of the general term ‘search engine.’ For the record, it isn’t the only search engine in the world; Russia has Yandex, China has Baidu, and South Korea has Naver. But Google’s approach to search has arguably set the standard that the rest are measured against.

One reason for Google’s success story is that it releases a prototype and improves it with every update. Google Search is one example, starting with a basic algorithm and adding new features over time. It knows how to keep up with the times and adjusts accordingly, just as any modern business – big or small – should.

For example, as people became increasingly reliant on the internet, Google saw the need to change its algorithm to serve their needs better. As such, it cracked down on SEO practices that no one had previously considered a problem, one of which was unethical link building. In this article, we’ll discuss how Google stopped this age-old practice.

The Black Hats of Link Building

As a company specialising in link building, we like to talk about how beneficial link building can be (like, a lot). Now, saying that Google wants proper link building isn’t entirely accurate. By its own account, it only penalises websites that try to game the search engine’s algorithm. If that isn’t your style, then you’ll be fine.

Nevertheless, discerning what’s right or wrong in link building is a must. Whether you knew or didn’t, a violation is a violation in Google’s eyes, and recovering your rankings after a penalty can take a very long time. We’ve already discussed common black-hat link building practices in a past blog post, but let’s briefly review them again.

1. Poorly written content

This practice can’t get any more straightforward. Any piece or post that hardly contributes to a user’s search for answers has no business appearing in Google’s search engine results pages (SERPs). On top of that, people who have viewed such content won’t be able to take the site seriously, resulting in a drop in its reputation.

Google’s Search Quality Evaluator Guidelines describe what quality evaluators have to look for in high- or low-quality content. The guidelines go in-depth, but here are a few red flags signifying the latter.


- Inadequate expertise, authoritativeness, and trustworthiness

- Unsatisfying amount of helpful main content on the page

- Too many ads interfering with users’ perusal of the content

- The main content title being too exaggerated or shocking


These are just some of the things to watch out for.

2. Private blog networks (PBNs)

A PBN consists of a network of ‘feeder’ websites feeding link juice to the parent or ‘money’ site. Google began taking action against these link building models in 2014 because the links being pushed to the parent site were unnatural. In addition, PBNs give websites an unfair business advantage over those practicing responsible SEO.

3. Low-quality backlinks

Many website owners don’t care about getting quality backlinks as long as they’re backlinks. The problem is that Google disagrees with this mindset. Quality backlinks should help users with their search, not assist the business in gaining exposure on SERPs.

Low-quality backlinks come in all shapes and sizes. However, the five most apparent are backlinks that come from:


- Spammy websites

- Paid link schemes (known as ‘link mills’)

- Poor-quality press releases

- Websites unrelated to the business’s industry

- Blog comments and discussion thread posts


These can damage your rankings greatly. It’s best to avoid them when link building.

4. Black-hat 301 redirects

While a 301 redirect (a permanent redirect from one URL to another) isn’t a black-hat SEO practice in itself, it’s prone to misuse and abuse. It isn’t unusual for websites to buy as many expired domains as possible, provided they still have backlinks from websites with high authority ratings. By redirecting those domains, buyers funnel any valuable link juice the domains still have to their own website.

No One Bothered Until Google

Link building had been around way before Google, and even before its predecessor, Backrub, existed. But in the age of search engines like WebCrawler, Infoseek, and AltaVista, its original purpose was to drive traffic.

It’s not like the SEO community back then didn’t want to implement a set of guidelines – they didn’t see an actual need. Remember, this was when websites were created mainly via Notepad. An algorithm that automatically flags violations would’ve been costly and resource-intensive, if not impossible, which search engines didn’t have the appetite for at the time.

It wasn’t until Google became the search provider for Yahoo in 2000 that it decided to take SEO seriously. The world later watched how this nascent search engine brand would take SEO to the digital age – and it started with an all-too-familiar feature.

PageRank: Google’s Vote of Confidence

In a way, you could say that PageRank was the heart and soul of Google, but it’s more than that. The fact that we’re still talking about PageRank even past its ‘official’ death in 2016 implies its indispensable value to SEO (just so we’re clear, it’s far from dead).

PageRank was ahead of its time when it was introduced. No other search engine back then had an algorithm as advanced, let alone a formula for a page’s ranking as convoluted as this:

PR(A) = (1-d) + d (PR(T1)/C(T1) + … + PR(Tn)/C(Tn))

Don’t worry about taking note of this (unless you’re really into data science). All you have to remember is that this is how Google gives a particular page its vote of confidence. In the formula, T1 to Tn are the pages that link to page A, C(T) is the number of outbound links on page T, and d is a damping factor. The publicly displayed PageRank was a rating between 0 and 10, expressed in log base 10. A page with a PageRank of PR2 may be ranked between 1,000 and 10,000 in the SERPs.
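For the curious, here is a minimal Python sketch of how the formula above can be applied repeatedly until the scores settle. It is only an illustration, not Google’s actual implementation: the three-page link graph, the `pagerank` function name, the 50 iterations, and the damping factor of 0.85 (the value suggested in the original PageRank paper) are all our assumptions.

```python
def pagerank(links, d=0.85, iterations=50):
    """Repeatedly apply PR(A) = (1 - d) + d * sum(PR(T) / C(T)).

    `links` maps each page to the list of pages it links out to.
    """
    # Collect every page that appears as a source or as a target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    # Start every page with the same score.
    pr = {page: 1.0 for page in pages}
    for _ in range(iterations):
        new_pr = {}
        for page in pages:
            # Each linking page T passes on PR(T) divided by its outbound link count C(T).
            inbound = sum(
                pr[src] / len(targets)
                for src, targets in links.items()
                if page in targets
            )
            new_pr[page] = (1 - d) + d * inbound
        pr = new_pr
    return pr


# Hypothetical three-page site: A links to B and C, B links to C, C links to A.
links = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": ["A"],
}
scores = pagerank(links)
for page in sorted(scores):
    print(page, round(scores[page], 3))
```

In this toy graph, page C collects links from both A and B, so it ends up with the highest score, while B, which only receives half of A’s vote, ends up with the lowest.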

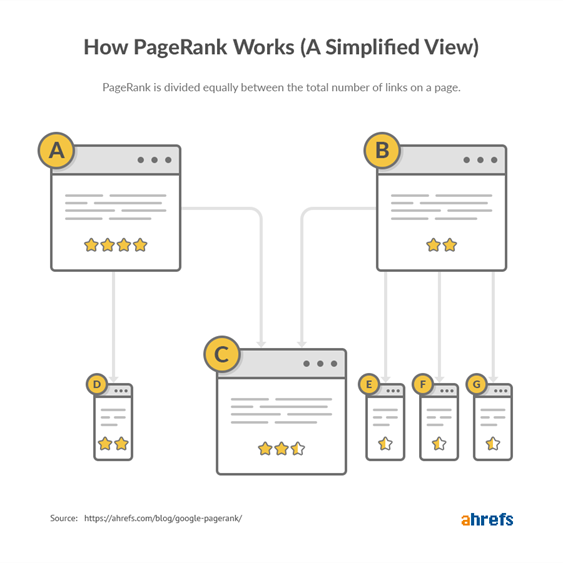

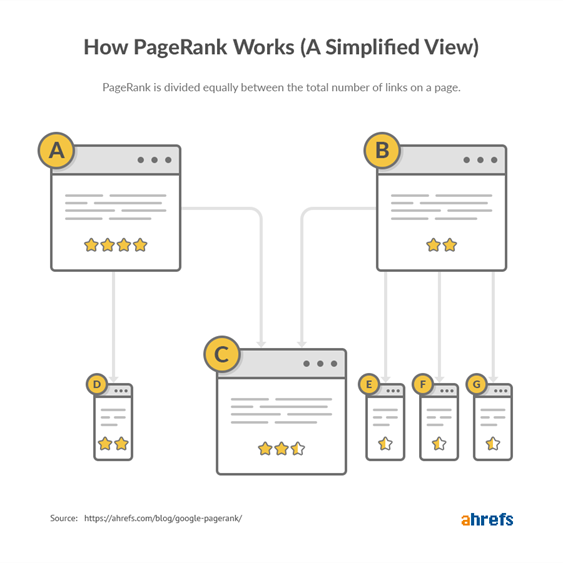

But if you want a general idea of how it works, it all boils down to the number of inbound and outbound links on a page and their respective PageRank ratings. Here’s a diagram courtesy of Ahrefs.

The algorithm is also designed to diminish every link’s value for every hop it makes. This way, a direct backlink would have more value than a backlink that had to hop several times to get to the page. Here’s another simplified graph from the same Ahrefs source.
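As a rough illustration of that decay, the sketch below multiplies a link’s value by the damping factor once per hop. The function name `value_after_hops` and the damping factor of 0.85 are our assumptions, and the sketch deliberately ignores the way value is also split across a page’s outbound links.

```python
DAMPING = 0.85  # assumed damping factor d; the value suggested in the original PageRank paper


def value_after_hops(value, hops, d=DAMPING):
    """Return the share of `value` left after passing through `hops` links.

    Simplification: the split across each page's outbound links is ignored.
    """
    return value * d ** hops


# Compare a direct backlink (1 hop) with links that are further away.
for hops in range(4):
    print(hops, round(value_after_hops(1.0, hops), 3))
```

Under these assumptions, a link three hops away passes on noticeably less value than a direct one, which is the behaviour described above.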

Google designed a toolbar (now defunct) so that website owners could see how well their websites performed. With the advanced algorithm behind it, PageRank grew in popularity among website owners and SEO experts.

If that’s the case, then why did the company pull the plug on PageRank in 2016? In its official statement, Google said the internet had advanced too far for the algorithm to be of use. However, some experts dispute that claim, believing that PageRank possesses one major flaw.

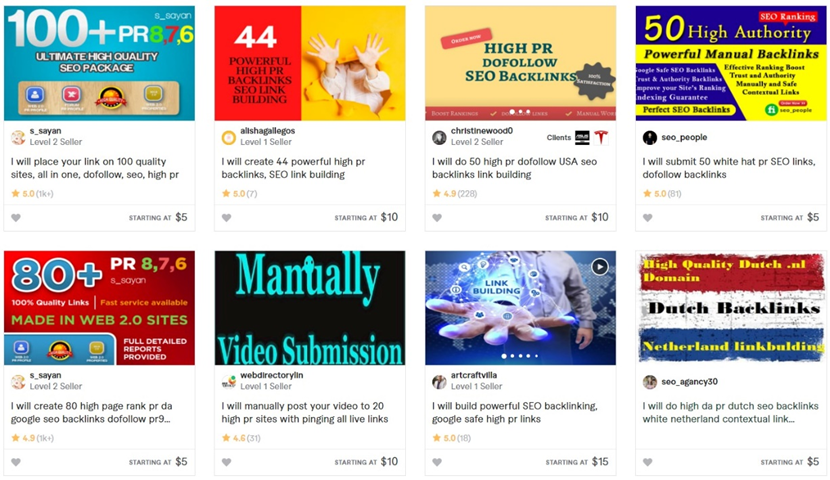

Remember when we mentioned that a page’s PageRank depends on the number of inbound and outbound links? Some crafty businesspeople used this flaw to game the system by spamming as many links as their clients want. Just search for ‘pr links’ on sites like Fiverr for example.

Though still a factor in SERP rankings, PageRank was no longer accessible to website owners and SEO experts after 2016. It took link spamming down with it, making this black-hat practice more difficult (though some examples remain persistent). The industry has developed alternative metrics since, but none are official replacements for PageRank.

Panda: Content quality, first and foremost

In the early 2010s, Google had to deal with another SEO headache: content farming. This model produces content that talks about various topics but adds next to nothing in terms of value. Its only purpose is to help websites rank higher on SERPs by integrating high-value keywords into the article or post.

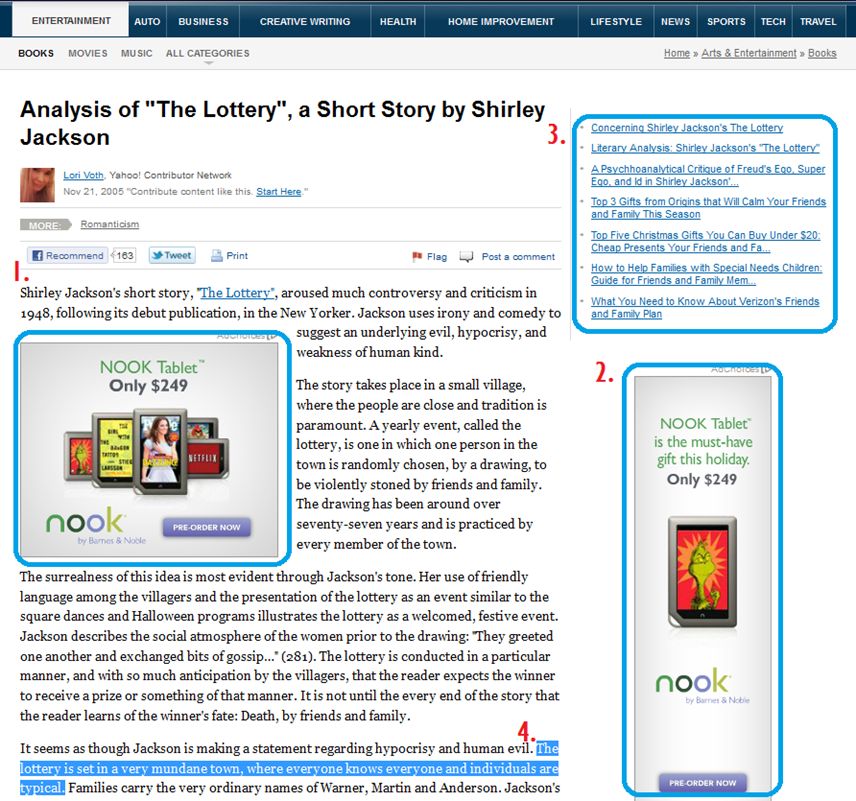

A resource from the Austin Community College’s Library Service vividly details what content from such farms looks like. It typically has four traits:

- Grossly generic tone of writing

- Plenty of ads on the page

- Long list of links on the page

- Sections lifted from other sources without citation

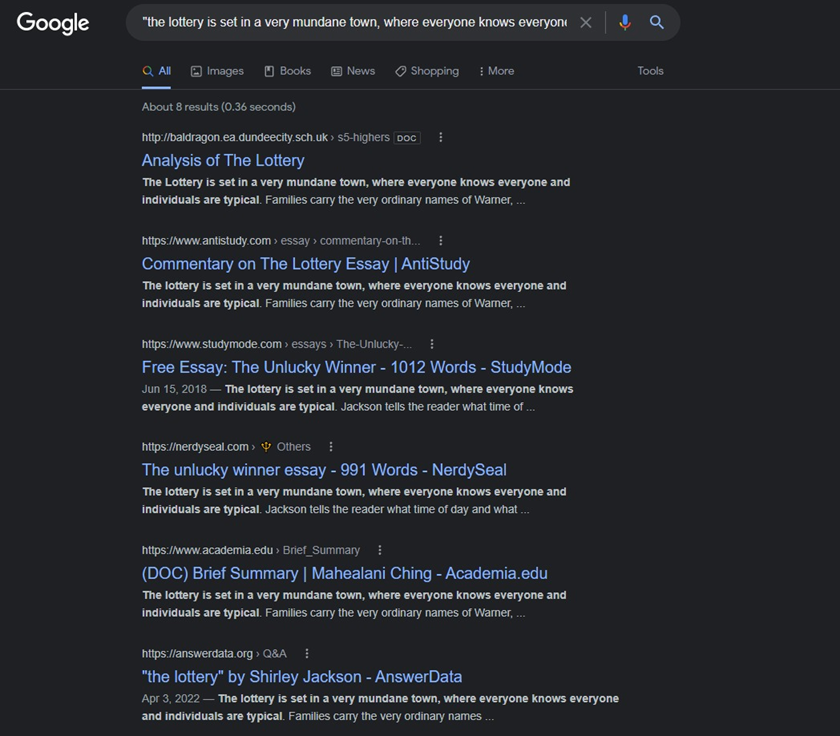

These days, you almost can’t get away with plagiarism. We say ‘almost’ because some people still do it and rank. Try Googling the highlighted part but enclosed in quotation marks, and you should get results like this:

Unfortunately, content-farmed results were everywhere in the early 2010s. When Google introduced its Caffeine update in 2009, it noticed that its index held as much bad content as good content. This fouled up the SERPs, affecting people’s ability to pick out quality content.

Unlike the problem with link spam, this issue is qualitative. Google had to develop an update to the algorithm that allows it to judge if a piece of content is informative enough or total rubbish. It’s impossible to do this without getting feedback from evaluators and users, which Google did for the next few years.

The result is the Panda update, which rolled out on 23 February 2011. Contrary to popular belief, its namesake isn’t an actual panda but the man behind the update: a staff member named Navneet Panda. He developed the core features in Panda that nearly killed practices like content farming.

Still, like an actual panda, the update was vicious when provoked. Ranking drops swept through roughly 12% of all English queries within 24 hours, which freaked many website owners out. It got so serious that Google later had to point out that the update wasn’t a penalty.

The recovery would take several years for sites hit hard by the update. The graph below shows the organic traffic to The New York Times website, according to SEMRush metrics.

While the data starts from January 2012, nearly a year after the Panda-monium (pun intended), it clearly shows that organic traffic was virtually at rock bottom. It took the site an entire decade to get to more significant numbers. Considering The New York Times is one of the world’s most reputable news sources, it shows that Panda spared no one.

But if you think the storm subsided a year later, you’re mistaken. Panda is only half of a one-two punch that would stop unethical SEO manipulation.

Penguin: Make link building great again

As fearsome as Panda was, it mainly tackled content quality. Google had to create a new update to tackle two more pervasive problems in the SEO sphere: keyword stuffing and unethical link schemes. Let’s start with keyword stuffing by looking at the example below.

If you feel like the keyword ‘link building’ has overstayed its welcome in this paragraph, that’s keyword stuffing in a nutshell. It’s a rudimentary practice that depends on slapping a piece of content with as many keywords as possible to rank high in search queries. Such content provides a terrible experience for visitors.
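To put a number on that feeling, the sketch below computes a simple keyword density: the share of a text’s words taken up by a given phrase. This is only our illustration; the `keyword_density` function and the sample sentence are hypothetical, and Google doesn’t publish any density threshold that would mark a page as stuffed.

```python
import re


def keyword_density(text, keyword):
    """Return the fraction of words in `text` that belong to occurrences of `keyword`."""
    # Lowercase everything and keep only word-like tokens.
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = keyword.lower().split()
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the whole phrase appears in sequence.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words)


# Hypothetical stuffed sentence.
sample = "Link building tips: our link building service makes link building easy."
print(f"{keyword_density(sample, 'link building'):.0%}")
```

A figure like this is best treated as a prompt to reread the copy, not as a pass or fail test.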

Meanwhile, ‘link schemes’ is an umbrella term for practices that manipulate PageRank or a site’s SERP ranking. Some of these include:

- Paying or trading goods for links that pass PageRank

- Agreements to mutually link each other’s pages

- Employing automated link creation programs or services

- Large-scale content marketing with keyword-rich anchors

Even with Panda at work, Google still noticed plenty of spam in the SERPs. The Penguin update, introduced on 24 April 2012, served as an additional net to catch anything that got past Panda. Its effects weren’t as widespread as Panda’s, affecting only 3.1% of queries on launch day. Even Penguin 2.0, released on 22 May 2013, hit only 2.3% of queries.

But if there was one big loser in all this, it was PBNs. Google never intended to go after PBNs directly, as some might believe, but their nature of forwarding unnatural links would trigger Penguin’s system. Because of this, many SEO experts agree that making a PBN is too much of a risk in today’s SEO doctrine – and NO-BS couldn’t agree more.

Moving forward

Google’s improvements to its algorithm continue to this day, but they haven’t shaken the SEO industry to the degree that PageRank, Panda, and Penguin did. Their later integration into the core algorithm has cemented a new reality. Websites can no longer return to the SEO tactics that worked wonders for them over 20 years ago.

Granted, some website owners remain adamant about sticking to the old ways, but the rewards won’t be as significant. It’s no longer about ensuring you get noticed first on search queries but providing the answers your customers are searching for. If you understand this, there’s no need to worry about what Google may have in store moving forward.

If you wish to learn more about the right way to build links, why not check out the NO-BS blog? We strive to keep ourselves – and you, of course – up-to-date with the latest in the SEO industry. We also share helpful tips and tricks for gaining a competitive edge in a fair manner.
