9 Tips for a Successful and Effective Digital Marketing A/B Test

Aaron Gray

February 17, 2023

14 min read

‘A/B testing’ is a term that comes up frequently among marketers and marketing experts alike. Almost every aspect of a business, from sales copy down to price tags, can benefit from some form of A/B testing to determine the best way to promote products and services. Without it, a business might be marketing less efficiently than it believes.

It works like this: your marketing team produces two versions of the same ad or page. Usually, A is the original, and B features a few deliberate changes. The test randomly splits visitors to your site between the two versions and collects data for at least a week or two. The version with the higher conversion rate, provided the difference is statistically significant, is the one that ends up being used.
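To make the idea of showing both versions to visitors concrete, here is a minimal Python sketch of one common approach: hashing a visitor ID so each visitor is assigned to A or B at random but always lands in the same bucket on return visits. It is an illustration under assumptions, not how any particular testing tool works; the visitor IDs, the experiment name `homepage-cta`, and the 50/50 split are all hypothetical choices.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-cta") -> str:
    """Deterministically assign a visitor to 'A' or 'B' with a 50/50 split.

    Hashing the (hypothetical) experiment name together with the visitor ID
    means the same visitor always sees the same version, while different
    experiments get independent splits.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # map the hash to a number from 0 to 99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}")] += 1
    print(counts)  # roughly 5,000 visitors in each version
```

Deterministic hashing avoids storing every assignment, and it keeps returning visitors from flipping between versions, which would muddy the results.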

A properly conducted A/B test delivers more than just increased conversions. It lets a marketing campaign keep up with changing trends, which are moving faster than ever, so fewer visitors ‘bounce,’ or leave the site too soon. It also gives businesses more control over their marketing strategies: no more shooting in the dark when sending out emails.

That said, conducting an A/B test is more complicated than running two versions of one ad. I like to think of it as a scientific study, complete with a hypothesis and in-depth analysis. A single design mistake can invalidate the whole study.

Because these tests can cost four figures or more, you’ll want to get them right the first time whenever possible. Here are nine tips for pulling off a successful and effective digital marketing A/B test.

1. Think kaizen

Most experts cite gathering data as the first step in conducting any A/B test; technically, they’re correct. But I believe the process should start with the right mindset. What’s the point of doing something important if your head isn’t in the game?

A/B testing is all about constant improvement. There’s no perfect marketing strategy because the human marketers who develop it aren’t perfect. Assuming your ads need no changes in a rapidly changing world is a good way to make a marketing strategy flop.

This is the gist behind ‘kaizen,’ one of the business world’s major philosophies. The term means ‘improvement’ in Japanese, underscoring that businesses, and the way they do business, always have room to improve. It’s a cycle, as this graph from TechTarget below shows.

Do some of these fundamentals sound familiar? That’s because the A/B testing cycle is more or less the same: gathering information, formulating solutions, testing said solutions, gathering and analysing results, and adopting the better solution. When done correctly, A/B testing grows more cost-effective the more often it’s performed.

2. Choose the right variable

A/B testing can assess the effect of up to four variables at most, but experts almost always advise sticking to one. Testing multiple variables simultaneously makes it harder to tell which one influenced performance the most. That calls for multivariate testing, which is a topic for another blog post.

Your choice of variables depends on whether you want to conduct on-site or off-site A/B testing. As the business’s site is the subject of on-site testing, its variables usually include the following:

- Headlines and sub-headings

- Call-to-action button and text

- Featured images

- Pop-up advertisements

- Content above and below the fold

- Positioning of details (e.g., pricing)

- Required fields in a form

On the other hand, off-site testing involves other marketing media like ads and emails. Typical variables for this process are the following:

- Email/ad structure

- Email subject line

- Embedded media

- Exact text in promoting offers

- Call-to-action button and text

- Call-to-action location in the email/ad

There are also external variables, like the date and time of the campaign launch, which can skew the results if not controlled. This is why both versions of an A/B test are usually run over the same period (unless the test’s goal is to determine the best time to run the campaign).

3. Avoid overdoing metrics

This tip comes from Kaiser Fung, a professor of business analytics at Columbia University and author of several books on the subject. In an interview with the Harvard Business Review, Fung pointed out that monitoring too many metrics is one of three common mistakes businesses make when conducting A/B testing.

Tracking too many metrics, Fung stressed, risks spurious correlations: two variables appear to be linked, but the link is actually due to chance or an unseen third factor. If you’ve heard the phrase ‘Correlation doesn’t imply causation,’ it’s the same idea.
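A quick calculation shows why watching many metrics invites spurious results. The sketch below assumes the metrics are independent, the change has no real effect, and each metric is judged at the conventional 5% significance threshold. Real metrics are usually correlated, so treat the numbers as illustrative rather than exact.

```python
def chance_of_false_alarm(num_metrics: int, alpha: float = 0.05) -> float:
    """Probability that at least one of num_metrics independent metrics crosses
    the significance threshold by pure chance when the change has no real effect."""
    return 1 - (1 - alpha) ** num_metrics


for k in (1, 5, 10, 20):
    print(f"{k:>2} metrics: {chance_of_false_alarm(k):.0%} chance of a spurious 'winner'")
```

Under those assumptions, one metric carries a 5% chance of a false alarm, while 20 metrics push it to roughly 64%: more likely than not, something will look like a winner purely by chance.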

Take the two boxes at the beginning of this piece as an example. You may notice that more visitors clicked Box B than Box A and infer that yellow is a better colour. However, without sufficient re-testing, you may miss a bug that skewed the metrics partway through, especially if the test lasts a few months.

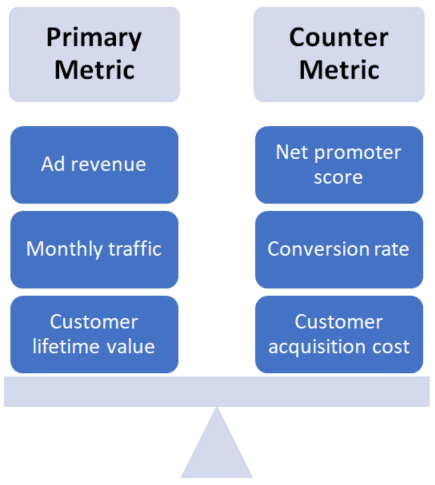

To mitigate the risk of spurious correlations, experts advise choosing a metric that the design change will directly affect. On-site metrics, such as bounce and click-through rates, are ideal options. Additionally, track one other metric as a counterbalance, so that a gain in the main metric doesn’t hide a loss elsewhere.

4. Get the sample size right

Like any scientific study, an A/B test takes time to generate results. As previously mentioned, it should run for at least a week. However, the ideal duration depends on the sample size, which, according to most A/B testing sample size calculators, depends on three factors.

The first is the baseline, or current, conversion rate of the target page or ad. As the graph below shows, a higher baseline and a larger expected improvement both reduce the sample size needed to detect a real improvement in the page’s performance.

For example, testing a page or ad with a 1% baseline to see a 5% improvement requires a sample size of over 450,000 visitors. On the other hand, testing a page or ad with a 25% baseline to see if it can achieve a 25% improvement requires a sample size of just 200 visitors.

The second is the minimum detectable effect (MDE), the smallest improvement the test is designed to detect, portrayed by the three lines in the graph. Experts recommend setting the MDE based on your allotted budget and resources. The smaller the MDE, the more traffic you need: set it below 5% without enough visitors, and the test will be ‘underpowered,’ lacking the power to show a real effect as statistically significant.

Speaking of which, the last, and perhaps most important, factor is statistical significance. A higher significance level means a lower risk of false positives, where random noise is mistaken for a real difference. Since it’s impossible to establish a connection between variables with certainty, researchers often settle for a statistical significance of 90% or 95%. For A/B testing, the figure shouldn’t go any lower than 80%.
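To see how a significance threshold is applied in practice, the following sketch runs a pooled two-proportion z-test, one standard way to compare two conversion rates. The visitor and conversion counts are hypothetical, and testing tools may use other methods (for example, Bayesian or sequential statistics), so treat this as an illustration rather than a reproduction of any tool’s calculation.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test: returns (z score, two-sided p-value)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were identical
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200/5,000 conversions for A, 250/5,000 for B.
z, p = two_proportion_z_test(conv_a=200, n_a=5_000, conv_b=250, n_b=5_000)
significance = 0.95
print(f"z = {z:.2f}, p = {p:.4f}, significant: {p < 1 - significance}")
```

With these made-up counts (4% versus 5% conversion on 5,000 visitors each), the p-value comes out below 0.05, so the difference would count as significant at the 95% level.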

You can plug the baseline, MDE, and significance level into a sample size calculator, such as this one from Optimizely, which generates a recommended sample size for each variation.
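For a feel of what such calculators do, here is a sketch of the classic fixed-horizon sample size formula for comparing two conversion rates, treating the MDE as a relative lift. The defaults of 95% significance and 80% power are common conventions assumed here, not settings taken from any specific calculator; Optimizely’s calculator uses its own statistical approach, so its figures won’t necessarily match these.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            significance: float = 0.95, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion test.

    baseline     -- current conversion rate, e.g. 0.01 for 1%
    relative_mde -- smallest relative lift worth detecting, e.g. 0.05 for +5%
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)  # two-sided threshold
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return ceil(n)


print(sample_size_per_variant(0.01, 0.05))  # low baseline, small lift
print(sample_size_per_variant(0.25, 0.25))  # high baseline, large lift
```

The two calls mirror the pattern described above: a 1% baseline with a 5% relative lift needs hundreds of thousands of visitors per variant, while a 25% baseline with a 25% lift needs only several hundred.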

Unless your business is Facebook or a household name in a specific industry, your A/B test will be limited to the traffic your page or ad receives regularly. Reaching your target sample size in this scenario can take months. This brings us to the next tip:

5. Avoid rushing the test

Fung noted that many businesses don’t let their A/B tests run their course, a mistake he said tends to ‘evolve out of impatience.’ As I mentioned earlier, these tests are akin to scientific studies, and a rushed study can do more harm than good.

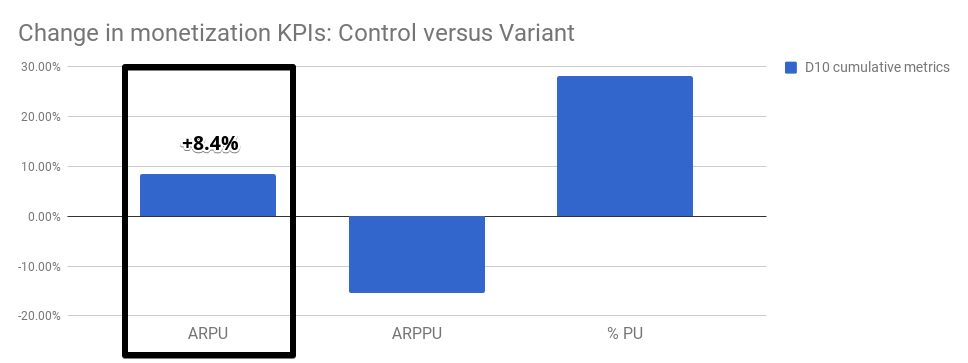

Case in point: video game developer Kongregate wanted to introduce a pop-up in one of its mobile games that would let players buy in-game items cheaply. It ran an A/B test that lasted a month. Below are the recorded changes for the first 10 days of the test.

The data suggests that the version with the pop-up generated a 27% increase in player conversion and an 8.4% increase in average revenue per user (ARPU). The only metric where it fell short was average revenue per paying user (ARPPU), where it performed worse than the original. But so far, so good, right?
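ARPU and ARPPU can move in opposite directions because of a simple identity: ARPU equals the conversion rate (the share of players who pay) multiplied by ARPPU. The sketch below assumes all three metrics are measured over the same players and period, which may not match how Kongregate computed them, so the implied ARPPU change is a back-of-the-envelope estimate, not a figure reported from the test.

```python
def revenue_metrics(players: int, paying_players: int, revenue: float) -> dict[str, float]:
    """Conversion, ARPU and ARPPU for one group of players over one period."""
    return {
        "conversion": paying_players / players,  # share of players who pay
        "arpu": revenue / players,               # average revenue per user
        "arppu": revenue / paying_players,       # average revenue per paying user
    }


def implied_arppu_change(conversion_lift: float, arpu_lift: float) -> float:
    """Because ARPU = conversion x ARPPU, relative changes multiply:
    (1 + arpu_lift) = (1 + conversion_lift) * (1 + arppu_lift)."""
    return (1 + arpu_lift) / (1 + conversion_lift) - 1


# Hypothetical group, just to show the identity holds.
m = revenue_metrics(players=1_000, paying_players=50, revenue=500.0)
assert abs(m["arpu"] - m["conversion"] * m["arppu"]) < 1e-12

# Lifts quoted above for the pop-up version over the first 10 days.
print(f"Implied ARPPU change: {implied_arppu_change(0.27, 0.084):.1%}")
```

Plugging in the 27% conversion lift and 8.4% ARPU lift implies ARPPU fell by roughly 14.6%: more players paid, but each paying player spent less on average.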

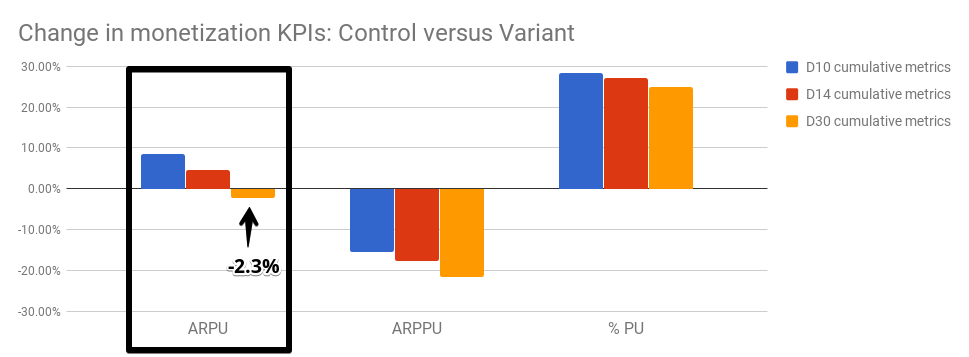

The developers decided to let the test play out for a little longer. By day 30, the version with the pop-up had performed worse than the original in both ARPU and ARPPU, to their disbelief.

Had Kongregate stopped testing on day 14, it might have implemented the feature, leaving the game with reduced conversions and revenue. Although the developers had spent precious hours building the feature, the company decided to keep the original.

Of course, there are times when the daily data from an A/B test doesn’t show a significant difference, in which case experts suggest starting over with new variables. As for how long your A/B test should run to produce valid results, it depends on several factors, not least the sample size you need and the traffic your page or ad receives.
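The arithmetic behind test duration is straightforward: divide the total sample you need by your daily traffic. This sketch adds two assumptions of its own: it rounds up to whole weeks so every day of the week is equally represented, and it enforces a two-week minimum in line with the Google guidance discussed later in this piece. The traffic figures are hypothetical.

```python
from math import ceil


def estimated_test_days(sample_per_variant: int, variants: int,
                        daily_visitors: int, min_days: int = 14) -> int:
    """Estimate how many days a test must run to reach its target sample.

    Assumes traffic is split evenly across variants and stays roughly constant.
    The result is rounded up to whole weeks and never falls below min_days.
    """
    total_needed = sample_per_variant * variants
    raw_days = ceil(total_needed / daily_visitors)
    whole_weeks_in_days = ceil(raw_days / 7) * 7
    return max(whole_weeks_in_days, min_days)


print(estimated_test_days(sample_per_variant=20_000, variants=2, daily_visitors=500))
```

For example, needing 20,000 visitors per variant with 500 visitors a day works out to 80 days, rounded up to 84, or roughly three months, which shows how quickly modest traffic turns a test into a months-long project.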

6. Craft a data-driven hypothesis

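An A/B test’s hypothesis is important for two reasons, and I’ll let a quote from renowned nuclear physicist Enrico Fermi summarise them:

‘There are two possible outcomes: if the result confirms the hypothesis, then you’ve made a measurement. If the result is contrary to the hypothesis, then you’ve made a discovery.’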

A hypothesis is primarily what makes A/B tests more than randomly throwing out two versions of an ad or email. It’s an ‘educated guess’: an assumption, based on whatever data or evidence was available beforehand, that the test will prove right or wrong. Either outcome is desirable, as the quote above implies, because it opens up new possibilities.

In drafting a hypothesis, you can’t go wrong with an if-then-because statement. For example: if we move information that currently sits above the fold to below it, then it will get fewer clicks, because our data shows that content above the fold receives more clicks. Whether the test confirms or contradicts the hypothesis, the result says a lot about visitor behaviour, among other things.

Hypotheses used to be mostly assumptions, but today, they’re more data-driven than ever. That’s right: we now must gather data just to formulate a hypothesis, before we even conduct the actual A/B test. In a way, we have former U.S. Secretary of Defence Donald Rumsfeld to thank for opening our eyes to ‘known unknowns’ and ‘unknown unknowns’ more than 20 years ago.

Amid these unknowns, finding the shortest path to favourable results has become more vital than ever. A data-driven hypothesis can help keep sales teams focused on elements of utmost priority.

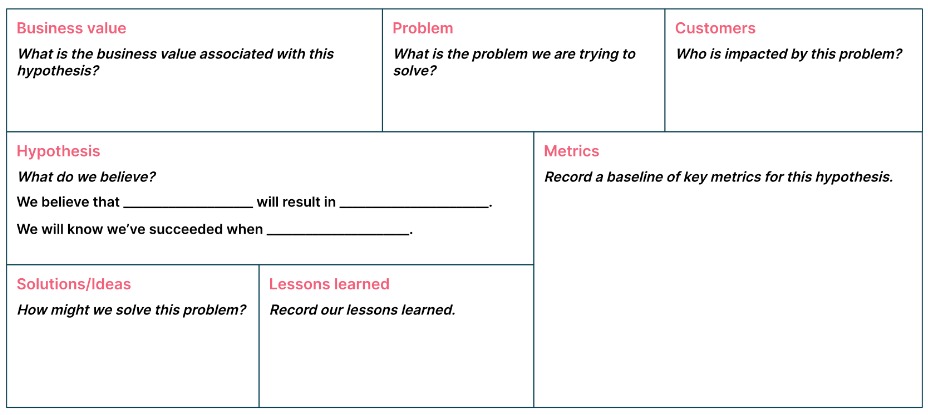

As for where to look for the needed data, third-party analytics tools make for excellent sources. You can also ask visitors to answer a short survey, provided it asks the right questions. Present your data in a hypothesis canvas like this template from Thoughtworks:

A/B testing should eliminate the need for guesswork, not to mention biases. It’s all the more reason to have a data-driven hypothesis.

7. Ensure SEO friendliness

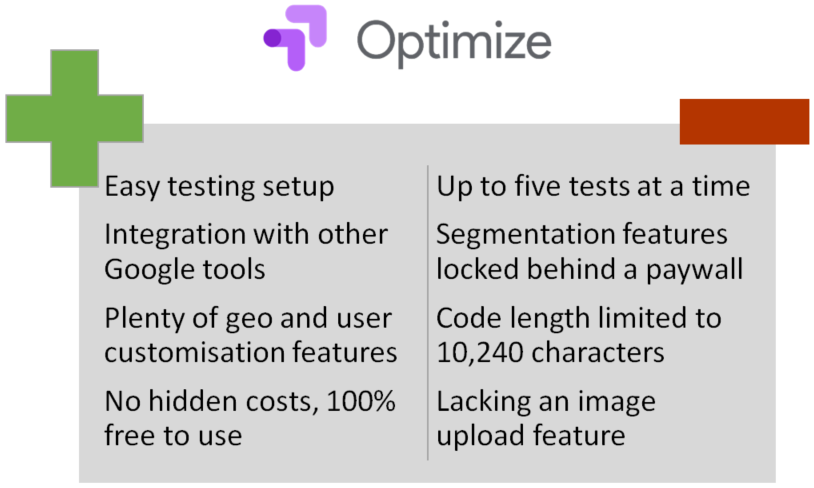

As the variations will run on the internet for some time, it pays for them to comply with current SEO guidelines. Google states that properly run A/B tests won’t hurt search rankings, and it encourages testing as a way to improve overall user experience. It even operates its own testing tool, Google Optimize, which can be used in conjunction with Google Analytics.

In a Webmaster Hangout video, Google’s content quality advocate John Mueller asked testers not to make indexing harder for Google’s crawlers. He advised against switching between versions randomly, so that crawlers can index landing pages properly, and recommended showing crawlers the version that most people are likely to see in search results.

Moreover, Google’s Search Central blog advises the following best practices to avoid A/B tests being penalised by mistake:

- Avoid cloaking. This is good general SEO advice, not just for A/B testing. Cloaking, or showing one version to visitors and another to crawlers, is considered manipulation of the search engine algorithm, which goes against Google’s guidelines. Always show the user-agent Googlebot the same content that visitors see.

- Use canonicalisation. Add a rel=“canonical” link to each variation that points to the original URL, telling Google the original is the preferred version. Google doesn’t recommend using the noindex tag instead, as it might erroneously treat the original URL as a duplicate and drop it from the search results.

- Use temporary redirects. A 302 (temporary) redirect tells Google that sending visitors to a variation of the original URL will only last for the duration of the A/B test. Keep the original URL indexable.

- Stick to a timetable. I’ve explained that an A/B test shouldn’t be rushed, but letting it run for longer than necessary may be interpreted as an attempt to manipulate the search engine. Once the A/B test is finished, update the content with the preferred version and remove all test elements.
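The canonicalisation and temporary-redirect advice above can be sketched as a tiny Python WSGI app using only the standard library. Everything here is hypothetical (the `example.com` URL, the `/landing-b` path, the `visitor_id` cookie), and a real site would usually configure this in its testing tool, CMS, or web server instead. Bucketing depends only on the visitor ID, never on the user-agent, so Googlebot is treated exactly like any other visitor, in keeping with the no-cloaking rule.

```python
import hashlib
from http.cookies import SimpleCookie

# Hypothetical URLs and paths, for illustration only.
ORIGINAL_URL = "https://www.example.com/landing"
ORIGINAL_PATH = "/landing"
VARIANT_PATH = "/landing-b"

ORIGINAL_HTML = "<html><head><title>Offer</title></head><body>Version A</body></html>"
# The variant declares the original URL as canonical, so search engines
# keep treating the original as the preferred page.
VARIANT_HTML = (
    "<html><head><title>Offer</title>"
    f'<link rel="canonical" href="{ORIGINAL_URL}">'
    "</head><body>Version B</body></html>"
)


def bucket(visitor_id: str) -> str:
    """Deterministic 50/50 split based only on the visitor ID."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return "A" if int(digest[:8], 16) % 2 == 0 else "B"


def app(environ, start_response):
    """Minimal WSGI app that sends bucket-B visitors to the variant via a 302."""
    path = environ.get("PATH_INFO", "/")
    cookie = SimpleCookie(environ.get("HTTP_COOKIE", ""))
    # Visitors without the cookie (crawlers included) all share one default ID.
    visitor_id = cookie["visitor_id"].value if "visitor_id" in cookie else "anonymous"

    if path == ORIGINAL_PATH and bucket(visitor_id) == "B":
        # 302 (temporary), not 301: the redirect only lasts as long as the test.
        start_response("302 Found", [("Location", VARIANT_PATH)])
        return [b""]

    pages = {ORIGINAL_PATH: ORIGINAL_HTML, VARIANT_PATH: VARIANT_HTML}
    if path not in pages:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not found"]

    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [pages[path].encode("utf-8")]
```

To try it locally, you could pass `app` to `wsgiref.simple_server.make_server` and visit `/landing` with different `visitor_id` cookies.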

On a related note, Google said A/B tests should end once two weeks have passed (to account for variations in web traffic) or once they have shown, with 95% confidence, that the variant will outperform the original in conversion rate. However, these tests can run for up to a few months, depending on the duration factors I’ve explained earlier.

That being the case, experts advise against running tests for more than two months. Not only might Google see it as manipulating the search engine, but it also risks introducing new biases into the test. As mentioned previously, if there isn’t a clear winner by then, start a new test with new variables. There’s little harm in trying again.

8. Never test without a testing tool

Considering the amount of data involved in an A/B test, you’ll want a capable testing tool in place before testing begins. Like most effective digital marketing tools, A/B testing tools pack many necessary features into one program, from real-time data tracking to automating some steps.

The internet has no shortage of testing tools, both free and paid. In the free category, many marketing professionals swear by Google Optimize, a powerful A/B testing tool with no strings attached. It isn’t the most advanced, but it’s enough for most situations. Note that it’s different from Google Optimize 360, which is a paid tool. Also note that Google has announced that both Optimize and Optimize 360 will no longer be available after September 30, 2023.

For tools with more oomph, you’ll have to pay a monthly fee. One of the biggest names in this sector is Optimizely, which outperforms Google Optimize in nearly every respect. Its paid suites come in Web, Full Stack, or both.

Some tools are designed for A/B testing campaigns on specific platforms, such as social media or e-commerce sites. For example, Facebook offers its own free testing tool, built into Meta Business Manager, but it doesn’t work on Instagram as of this writing.

9. Re-test, re-test, re-test

At this point, you’re probably convinced that A/B tests are more complicated than people give them credit for. I’ve discussed every important bit of A/B testing, from determining the correct metrics to setting the ideal duration and sample sizes. I’ve also explained the importance of using a testing tool in conducting these tests.

But even if you’ve followed these tips to a T, an A/B test is still prone to flukes. One thing that Fung noticed is that most businesses accept the results without sufficient re-testing. As a result, they become confused when the next A/B test yields contradictory results. He also stressed that the chance of a false positive remains huge even with a 95% statistical significance.
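One common way to see why a 95% significance level still leaves plenty of room for false positives (not necessarily Fung’s own reasoning) is to consider how many tested ideas actually work. The sketch assumes a 5% significance threshold, 80% power, and hypothetical shares of tested changes that genuinely improve results.

```python
def share_of_false_winners(prior_true: float, alpha: float = 0.05, power: float = 0.80) -> float:
    """Fraction of 'significant' results that are actually false positives.

    prior_true -- assumed share of tested changes that genuinely work
    alpha      -- significance threshold (0.05 corresponds to 95% significance)
    power      -- chance that a genuinely better variant is detected
    """
    false_positives = (1 - prior_true) * alpha  # duds that pass by luck
    true_positives = prior_true * power         # real improvements that get detected
    return false_positives / (false_positives + true_positives)


for prior in (0.5, 0.2, 0.1):
    print(f"{prior:.0%} of ideas work -> {share_of_false_winners(prior):.0%} of winners are false")
```

If only 10% of the changes you test truly work, roughly 36% of your ‘significant’ winners would be false positives under these assumptions, which is why re-testing pays off.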

This is where we return to the first tip: having a kaizen mindset. A/B testing is never over as long as a business strives to attract more customers. The end of one test sets the stage for the next, complete with new variables. The next test doesn’t have to start the following day, but re-testing every few months is a good habit.

Final thoughts

A/B testing is by no means perfect. The outcome of a single test can’t guarantee how consumer trends or the business climate will play out, especially when changes happen quickly. However, the beauty of A/B testing is that it lets you try something new when the old approach doesn’t work out. It’s a difficult but necessary part of improving as a business.

You know what else is just as integral? Getting the right professionals to help you along. Check out this page to learn more about how Pursuit Digital can build the most effective digital marketing strategies to suit your needs.
