Google’s “Secret Sauce”

A recent filing in the Department of Justice’s antitrust case against Google Search has been discussed across the industry, including in a write-up from Search Engine Journal. The interesting part isn’t the legal angle; it’s what Google appears to treat as sensitive enough to protect aggressively. When a company draws a hard line on what it won’t share, it usually reveals what it considers hard to rebuild and easy to exploit. In this case, the themes that surface map closely to what SEO teams already experience in the real world.

This post is our take on what’s worth learning from it, without turning it into a recap.

The simplest way to describe modern search

Search doesn’t behave like a library. It behaves like a feedback machine. Pages get discovered and processed. Pages get classified. Some pages stick, some pages fade. Over time, Google learns what tends to satisfy different kinds of searches based on what people actually do.

1) “Ranking” starts earlier than most people think

Most SEO conversations start at ranking. In practice, the hard work begins earlier:

Can Google understand what the page is?

Can Google trust the page enough to keep it?

Can Google justify spending resources crawling and re-crawling it?

Can the page compete in a space where thousands of similar pages already exist?

That framing changes the day-to-day priorities. It also explains why some sites keep publishing and still struggle to show up consistently.

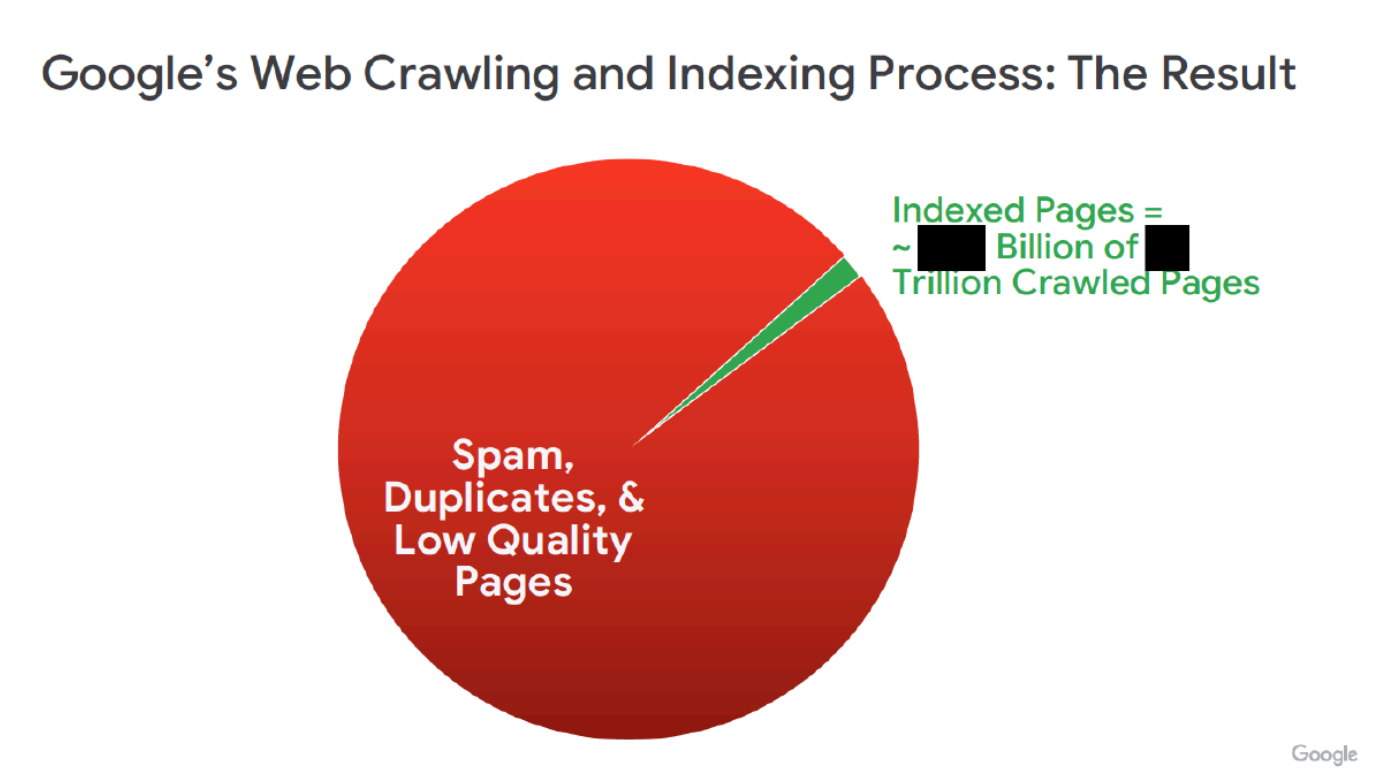

Page understanding and annotations sound like the real gate

Descriptions of the filing that circulated mention “page understanding annotations,” plus systems for labeling spam and duplicates. You don’t need the internal names to recognize the behavior: Google crawls a lot of content but stores and serves only a portion of it in a way that’s stable enough to compete. That’s also why “my page is indexed” often feels like a confusing milestone. A page can be indexed and still have inconsistent visibility, because it may sit in a part of the system that rarely gets surfaced.

Where sites accidentally make this harder

This often comes up on sites that publish a lot of pages over time. Location pages start to resemble each other. Service pages shift keywords but keep the same structure. Programmatic builds repeat the same layout with minimal change in substance. Content clusters slowly drift until several pages answer the same questions.

Individually, these pages can be perfectly fine. Taken together, they blur into one another, and it becomes harder for any single page to stand out as clearly distinct or worth returning to. Sites that keep a tighter set of pages, where each one has a clear reason to exist, tend to feel more coherent and are easier for search systems to classify.
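A rough way to spot this kind of blurring on your own site is to compare page text pairwise. The following is a minimal sketch in Python, assuming you have already extracted each page’s main body text into a dict keyed by URL. Word-shingle Jaccard similarity is a generic near-duplicate measure chosen for illustration, not anything the filing describes, and the example pages and the 0.5 threshold are hypothetical.

```python
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word n-grams ("shingles") in a page's text."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common, from 0.0 to 1.0."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def overlapping_pairs(pages: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """List page pairs whose shingle overlap meets the (hypothetical) threshold, most similar first."""
    sets = {url: shingles(body) for url, body in pages.items()}
    pairs = []
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


# Hypothetical location pages built from the same template, plus one unrelated page.
example_pages = {
    "/plumber-austin": "Fast emergency plumbing repairs in Austin with licensed plumbers available every day",
    "/plumber-dallas": "Fast emergency plumbing repairs in Dallas with licensed plumbers available every day",
    "/pricing": "Plans start at a flat monthly fee and include unlimited service calls",
}

print(overlapping_pairs(example_pages))
```

Swapping one city name leaves most of the text shared, so the two location pages score well above the threshold while the pricing page does not. Pairwise comparison grows quadratically with page count, so on large sites it is more practical to run this within one template or section at a time.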

2) User behavior keeps showing up in how search improves over time

The filing coverage includes references to large stores of user activity around searches, plus learning systems trained on click and query patterns, refined using experiments and quality rater feedback. The easiest mistake here is to reduce this to a simplistic rule like “more clicks equals higher rankings.” Real systems don’t tend to work that way.

A more grounded interpretation is that behavior data can feed the learning loop that helps search understand outcomes, helping models learn patterns that correlate with satisfaction. For site owners, what tends to work is surprisingly straightforward. The page gets to the point early instead of warming up for paragraphs. It uses concrete details rather than vague statements. It anticipates the questions people usually have right after reading the main answer and addresses them in the same place. Examples show how the advice plays out in real situations, including where it applies and where it doesn’t. By the time someone reaches the end, the next step is clear without needing another search.

A lot of SEO content reads well on the surface but leaves the work unfinished. Pages that go a step further, by helping someone actually do the thing they came for, tend to hold attention longer and get reused more often over time.

Practical examples across common page types

A pricing page

People want to know what it costs, what is included, what changes by plan, what the catch is, and what the next step looks like. A pricing page that hides those answers behind vague copy creates extra searching.

A how-to guide

People want the steps early. They also want troubleshooting, options, and warnings. They do not want a long intro about why the topic matters.

A service page

People want to know who it is for, what it includes, what it doesn’t include, how long it takes, what they need to provide, and what a first step looks like.

A category page in ecommerce

People want clear filters, meaningful descriptions, and enough trust cues to choose without leaving the page four times.

3) Freshness reads like crawl strategy and storage strategy

The filing discussion includes references to freshness signals and timestamps like when a page was first seen and last crawled. That suggests a reality teams already feel: Google’s relationship with your site changes depending on how useful and current your pages appear to be. Some pages get revisited more often. Some pages get revisited less often. Some pages feel like “core references.” Some pages feel like one-offs. Freshness doesn’t mean “publish more.” Freshness means “keep the pages that represent current truth aligned with the real world.”
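Server access logs are one place a team can observe this revisit pattern for itself. The following is a minimal sketch in Python, assuming logs in the common Apache/Nginx “combined” format; the sample lines and paths are hypothetical. It trusts the user-agent string, which can be spoofed, so a production version should verify Googlebot requests with a reverse DNS lookup as Google documents.

```python
import re
from collections import Counter
from datetime import datetime

# Matches the Apache/Nginx "combined" log format (an assumption about your server setup).
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_revisits(lines):
    """Return {path: (hit_count, last_seen)} for requests whose user agent mentions Googlebot.

    Paths are ordered from most to least frequently crawled.
    """
    hits = Counter()
    last_seen = {}
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        path = match["path"]
        when = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        hits[path] += 1
        if path not in last_seen or when > last_seen[path]:
            last_seen[path] = when
    return {path: (count, last_seen[path]) for path, count in hits.most_common()}


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Mar/2024:08:15:02 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [12/Mar/2024:09:01:44 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [02/Feb/2024:11:30:00 +0000] "GET /old-offer HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [12/Mar/2024:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for path, (count, seen) in googlebot_revisits(sample_log).items():
    print(path, count, seen.isoformat())
```

Comparing hit counts and last-seen dates across a few weeks of logs gives a rough picture of which pages Google treats as core references and which it rarely returns to.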

Freshness as maintenance, not a calendar

Some pages end up carrying more weight than others because people keep coming back to them as reference points. Product and pricing pages are an obvious example, as are core comparisons, documentation, high-intent guides, and pages that explain rules, eligibility, or industry specifics that change over time. When these pages drift out of date, the confusion shows up quickly.

The kind of maintenance that actually helps is usually concrete. Screenshots get replaced, steps get corrected, examples get updated, and outdated claims get removed altogether. Simply changing a publish date or making light edits rarely fixes the real problem. Pages tend to hold up better when the changes reflect real updates that improve accuracy and make the information easier to trust and use.

4) The spam and duplicate angle is a reminder about risk

The filing coverage emphasizes spam signals and the argument that exposing them would help bad actors bypass detection. That matters because it suggests the system looks at patterns, not intent. A site can be honest and still resemble those patterns, which is usually where the “we did everything right” frustration comes from. The work becomes less about intent and more about footprint, clarity, and differentiation.

5) The overlap with AI visibility shows up in the same fundamentals

One detail in the legal discussion hints at a broader shift: the suggestion that user activity data could also be useful for training large language models.

That idea widens the lens beyond traditional rankings. Data about what people choose, trust, and spend time with has value wherever information gets surfaced, whether through search results or AI-driven answers. The same foundations tend to support both. Clear brand and entity context, consistency in how things are named and described, pages that can be referenced easily because they include concrete detail, and third-party mentions that back up what a brand claims all play a role.

In practice, SEO and AI visibility rarely operate as separate systems. They largely draw on the same pool of signals, just expressed through different interfaces.

What a modern SEO roadmap can look like under this lens

A modern SEO roadmap often starts by being more selective. Many sites publish far more pages than they can realistically support over time. That usually shows up later as flat performance, confusing signals, and growing maintenance overhead. A tighter approach focuses on identifying which pages genuinely matter, the ones people rely on as references, and investing in making those pages more complete than anything else covering the same ground.

Content that performs well over time usually does so because it helps someone finish what they came to do. When a page answers the question clearly, fills in the obvious follow-ups, and removes the need for another search, people tend to linger, return, share it, and reference it later. That kind of usefulness compounds naturally.

Overlap is another area where many sites struggle without realizing it. Multiple pages end up answering the same question in slightly different ways, targeting similar phrases, or repeating the same promises with minimal variation. Over time, this creates internal competition and makes it harder for any single page to stand out. Cleaning this up through consolidation often brings more clarity than publishing new content ever does.

Maintenance also benefits from being intentional. Some pages are designed to stay relevant for years, while others reflect information that changes regularly. Treating both the same leads to wasted effort in some places and neglect in others. Pages that represent current truth (pricing, products, comparisons, policies, or fast-changing topics) tend to benefit most from regular, meaningful updates, while genuinely evergreen pages can often be left alone until they show signs of age.
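One way to make that distinction operational is to give each page type its own review interval and flag the pages that are overdue. The following is a minimal sketch in Python; the page types, the intervals in days, the 180-day fallback, and the `Page` record are all hypothetical placeholders a team would replace with its own inventory and policy.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical review intervals in days: "current truth" pages get short cycles,
# evergreen pages long ones. Tune these to your own site.
REVIEW_INTERVAL_DAYS = {
    "pricing": 30,
    "product": 60,
    "comparison": 90,
    "policy": 90,
    "evergreen": 365,
}
DEFAULT_INTERVAL_DAYS = 180  # fallback for page types not listed above (assumption)


@dataclass
class Page:
    url: str
    page_type: str
    last_reviewed: date


def overdue_pages(pages: list[Page], today: date) -> list[tuple[str, int]]:
    """Return (url, days overdue) for pages past their review interval, most overdue first."""
    overdue = []
    for page in pages:
        interval = REVIEW_INTERVAL_DAYS.get(page.page_type, DEFAULT_INTERVAL_DAYS)
        days_since_review = (today - page.last_reviewed).days
        if days_since_review > interval:
            overdue.append((page.url, days_since_review - interval))
    return sorted(overdue, key=lambda item: item[1], reverse=True)


# Hypothetical inventory: the evergreen guide is older but still within its interval.
inventory = [
    Page("/pricing", "pricing", date(2024, 1, 1)),
    Page("/guide/what-is-seo", "evergreen", date(2023, 9, 1)),
    Page("/compare/us-vs-them", "comparison", date(2023, 12, 1)),
]

print(overdue_pages(inventory, today=date(2024, 4, 1)))
```

The oldest page in the example is not flagged, while a pricing page reviewed more recently is: the schedule follows how quickly the underlying facts change, not how long ago something was published.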

Teams usually don’t need special access to see whether this approach is working. Search performance data often highlights pages that get seen but rarely clicked, which can point to a mismatch between expectations and delivery. Analytics can surface pages where people arrive and leave quickly, especially when paired with shallow scrolling or low engagement. Support tickets, sales conversations, and internal site-search queries frequently reveal the same unanswered questions coming up again and again.
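A first pass at the first two signals can be scripted against exported data. The following is a minimal sketch in Python, assuming per-page impressions and clicks from a search performance report and an engagement rate from analytics have already been joined into simple records. The `PageStats` fields, the thresholds, and the example rows are hypothetical and should be tuned per site.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    impressions: int
    clicks: int
    engagement_rate: float  # share of sessions counted as engaged, 0.0 to 1.0 (hypothetical field)


def flag_pages(rows: list[PageStats], min_impressions: int = 1000,
               max_ctr: float = 0.01, min_engagement: float = 0.4) -> dict[str, list[str]]:
    """Return {url: [reasons]} for pages that look like an expectation/delivery mismatch."""
    flags = {}
    for row in rows:
        reasons = []
        ctr = row.clicks / row.impressions if row.impressions else 0.0
        # Seen often in search but rarely clicked: the result may not match what searchers expect.
        if row.impressions >= min_impressions and ctr < max_ctr:
            reasons.append(f"low CTR ({ctr:.1%})")
        # Visitors arrive but rarely engage: the page may not deliver on the click.
        if row.clicks > 0 and row.engagement_rate < min_engagement:
            reasons.append(f"low engagement ({row.engagement_rate:.0%})")
        if reasons:
            flags[row.url] = reasons
    return flags


example_rows = [
    PageStats("/pricing", impressions=5000, clicks=20, engagement_rate=0.55),
    PageStats("/guide", impressions=800, clicks=60, engagement_rate=0.25),
    PageStats("/services", impressions=3000, clicks=150, engagement_rate=0.60),
]

print(flag_pages(example_rows))
```

The minimum-impressions cutoff keeps low-traffic pages from being flagged on noise; the flagged list is a starting point for reading the pages, not a verdict on them.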

Sources

• United States v. Google LLC – Antitrust case filings and appeal materials referenced in industry reporting

https://www.courtlistener.com/docket/18552824/1471/2/united-states-of-america-v-google-llc/

• Liz Reid – Google VP, Search

https://www.linkedin.com/in/elizabeth-reid-56356724/

• Google – Public documentation on search systems, spam policies, and quality signals

https://developers.google.com/search/docs/essentials